Here are the steps to install the MySQL database, create a schema named as metastore and subsequently update the schema by executing hive-schema-2.3.0.mysql.sql.

Step 2: MySQL Database Installation for Hive Metastore Persistence and MySQL Java ConnectorĪs said above, both Hive and MySQL database has installed in the same DataNode in the cluster. Update hive-env.sh available inside conf dir with the HADOOP_HOME and HIVE_CONF_DIR

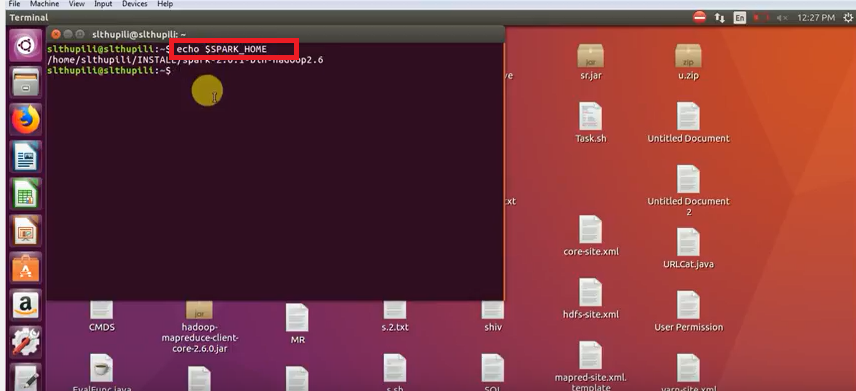

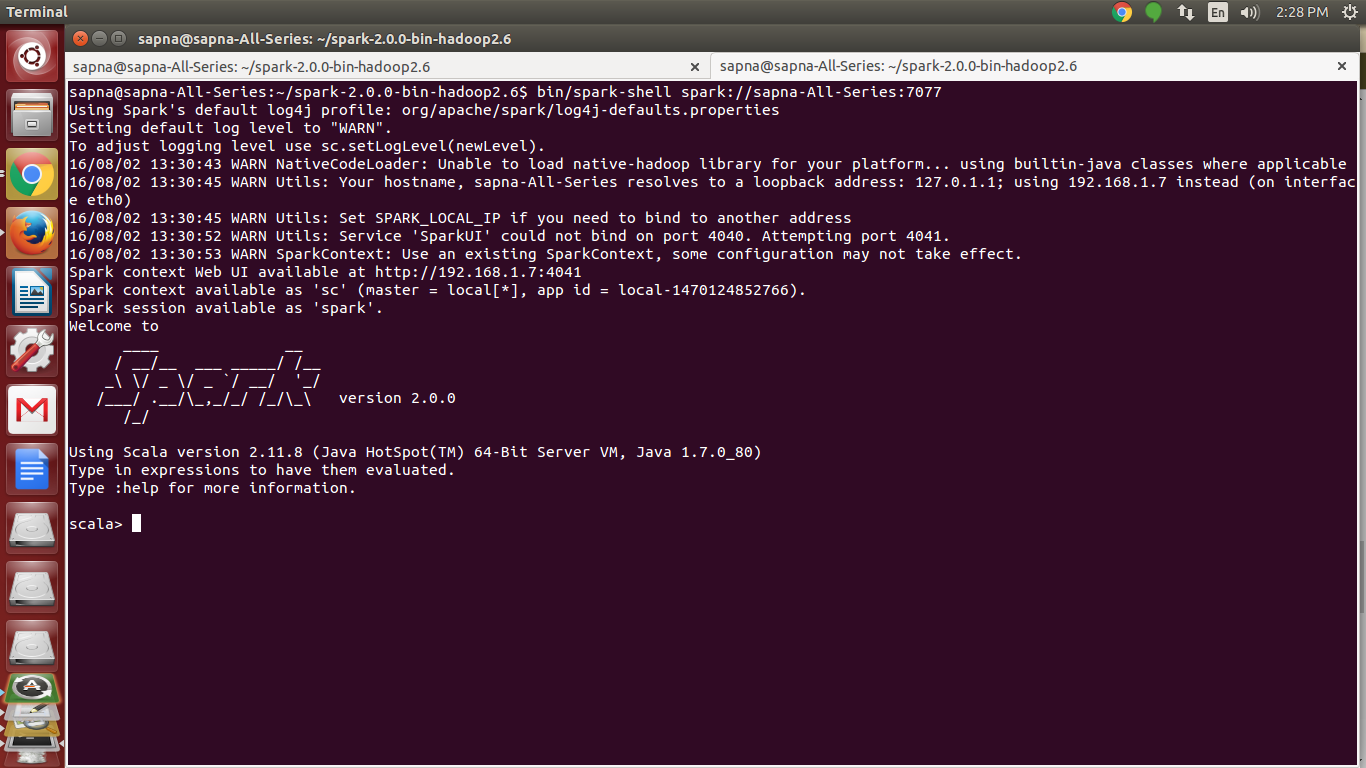

Copy hive-env.sh.template to hive-env.sh and update with all read-write accessĦ. Please re-login and try below to check the environment variable.ĥ. Update the ~/.bashrc file to accommodate the hive environment variables.Ĥ. Extract the previously downloaded apache-hive-3.1. from the terminal and rename it as a hive.ģ. Here, the used DataNode has 16GB RAM and 1 TB HD for both Hive and MySQL.Ģ. Select a healthy DataNode with high hardware resource configuration in the cluster if we wish to install Hive and MySQL together. Step 1: Untar Apache-Hive-3.1. and Set up Hive Environmentġ. Installed and used Server version: 5.5.62 It can be downloaded from the Apache mirror. Please go through the link if you wish to create a multi-node cluster to deploy Hadoop-3.2.0. Existing up and running Hadoop cluster where Hadoop-3.2.0 deployed and configured with 4 DataNodes.Here is the list of environment and required components. Apache Hadoop - 3.2.0 was deployed and running successfully in the cluster. In a future post, I will detail how we can use Kibana for data visualization by integrating Elastic Search with Hive. The objective of this article is to provide step by step procedure in sequence to install and configure the latest version of Apache Hive (3.1.2) on top of the existing multi-node Hadoop cluster. Ideally, we use Hive to apply structure (tables) on persisted a large amount of unstructured data in HDFS and subsequently query those data for analysis. Hive can be utilized for easy data summarization, ad-hoc queries, analysis of large datasets stores in various databases or file systems integrated with Hadoop. The Apache Hive is a data warehouse system built on top of the Apache Hadoop.
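The metastore preparation in Step 2 (create a schema named metastore, then run hive-schema-2.3.0.mysql.sql against it) can be sketched as a small Python helper that writes input for the mysql command-line client. This is a minimal sketch under stated assumptions, not the exact procedure used here: the helper name `metastore_setup_script`, the `IF NOT EXISTS` guard, and the script path are hypothetical, and installing MySQL and the Java connector is not covered.

```python
import re

# Conservative check for an unquoted MySQL schema name (letters, digits, underscore).
SCHEMA_NAME_RE = re.compile(r"[A-Za-z0-9_]+")


def metastore_setup_script(schema_name: str, schema_script: str) -> str:
    """Return mysql client input that creates the schema and runs the Hive schema script.

    Hypothetical helper: it only builds text; nothing is executed here.
    """
    if not SCHEMA_NAME_RE.fullmatch(schema_name):
        raise ValueError(f"unexpected schema name: {schema_name!r}")
    lines = [
        f"CREATE DATABASE IF NOT EXISTS {schema_name};",
        f"USE {schema_name};",
        # SOURCE is a mysql client command (not server SQL): it executes the
        # statements in the given file. It is terminated by the end of the line.
        f"SOURCE {schema_script}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical path: point it at the hive-schema-2.3.0.mysql.sql from your Hive download.
    script = "/path/to/hive-schema-2.3.0.mysql.sql"
    print(metastore_setup_script("metastore", script), end="")
```

Assuming the output is saved to a file such as setup_metastore.sql (a hypothetical name), it could be fed to the client with `mysql -u root -p < setup_metastore.sql`, where the root account is also an assumption. The sketch emits client input rather than sending statements through a database driver because `SOURCE` is interpreted by the mysql client, not by the server. If the schema script itself sources other files by relative path, run the client from the directory that contains it.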
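For the Step 1 check after logging in again, the verification can also be scripted. The following Python sketch is hypothetical: it assumes ~/.bashrc exports HIVE_HOME and HADOOP_HOME (the variables added to ~/.bashrc are not named here), and it only understands simple `export NAME=value` lines when reading HADOOP_HOME and HIVE_CONF_DIR back from hive-env.sh.

```python
import os
from pathlib import Path

# Assumed variable names; adjust to whatever your ~/.bashrc actually exports.
BASHRC_VARS = ("HIVE_HOME", "HADOOP_HOME")
# Variables set in hive-env.sh in Step 1.
HIVE_ENV_VARS = ("HADOOP_HOME", "HIVE_CONF_DIR")


def missing_env_vars(env, names=BASHRC_VARS):
    """Return the names that are unset or empty in the given environment mapping."""
    return [name for name in names if not env.get(name)]


def parse_exports(text):
    """Collect `export NAME=value` assignments from shell text (simple cases only)."""
    exports = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line.startswith("export "):
            continue  # also skips commented-out lines such as "# export ..."
        name, sep, value = line[len("export "):].partition("=")
        if sep:
            # Drop surrounding quotes; inline comments are not handled.
            exports[name.strip()] = value.strip().strip("\"'")
    return exports


if __name__ == "__main__":
    print("missing from environment:", missing_env_vars(os.environ) or "none")
    # Assumes hive-env.sh lives under $HIVE_HOME/conf.
    hive_env = Path(os.environ.get("HIVE_HOME", ".")) / "conf" / "hive-env.sh"
    if hive_env.is_file():
        exports = parse_exports(hive_env.read_text())
        for name in HIVE_ENV_VARS:
            print(f"{name} in hive-env.sh:", exports.get(name, "<not set>"))
    else:
        print("hive-env.sh not found at", hive_env)
```

Reading the file instead of sourcing it keeps the check side-effect free; the trade-off is that anything beyond plain `export` assignments (variable expansion, inline comments) is not interpreted.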
